Here's a list of some of the projects I am (or have been) involved with.

Surgical Simulation

We're working on a multi-user surgical simulator in which users interact with simulated scenarios through stereo graphics and haptic interfaces. The project, which is funded by NIH, is divided into several parts, described below.


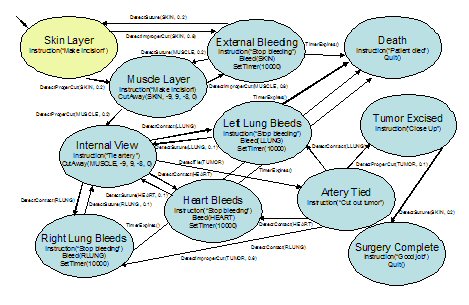

An Event-Driven Framework for the Simulation of Complex Surgical Procedures

Existing surgical simulators provide a physical simulation that can help a trainee develop the hand-eye coordination and motor skills necessary for specific tasks, such as cutting or suturing. However, it is equally important for a surgeon to gain experience in the cognitive processes involved in performing an entire procedure. The surgeon must be able to perform the correct tasks in the correct sequence, and must be able to respond quickly and appropriately to any unexpected events or mistakes. It would be beneficial for a surgical procedure simulation to expose the training surgeon to difficult situations only rarely encountered in actual patients. We present here a framework for a full-procedure surgical simulator that can detect discrete events and uses these events to track the logical flow of the procedure as performed by the trainee. In addition, we are developing a scripting language that allows an experienced surgeon to precisely specify the logical flow of a procedure without the need for programming. The utility of the framework is illustrated through its application to a mastoidectomy.

Where: Stanford Robotics Lab, Stanford School of Medicine

Collaborators: Christopher Sewell, Nikolas Blevins, Kenneth Salisbury
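The idea of tracking a procedure through discrete events can be illustrated with a minimal sketch. It assumes the procedure is an ordered list of required steps and that the simulator emits named events. The class name, the step names, and the event names are hypothetical and are not taken from the actual framework or its scripting language.

```python
from dataclasses import dataclass, field


@dataclass
class ProcedureTracker:
    """Tracks a trainee's progress through an ordered list of steps."""
    steps: list                                   # required events, in the expected order
    forbidden: set = field(default_factory=set)   # events that always count as errors
    position: int = 0                             # index of the next expected step
    errors: list = field(default_factory=list)

    def handle(self, event: str) -> str:
        """Classify one discrete event and advance the procedure state."""
        if event in self.forbidden:
            self.errors.append(event)
            return "error"
        if self.position < len(self.steps) and event == self.steps[self.position]:
            self.position += 1
            return "ok"
        if event in self.steps[self.position + 1:]:
            # A later step was attempted before the expected one.
            self.errors.append(event)
            return "out_of_order"
        return "ignored"

    @property
    def done(self) -> bool:
        return self.position == len(self.steps)


# Hypothetical step and event names, for illustration only.
tracker = ProcedureTracker(
    steps=["incision", "expose_bone", "drill_cortex"],
    forbidden={"nerve_contact"},
)
results = [tracker.handle(e) for e in
           ["incision", "drill_cortex", "expose_bone", "nerve_contact", "drill_cortex"]]
```

Keeping the expected sequence as plain data, separate from the tracking logic, is one way a non-programmer could specify a procedure. A real script would also need branching and recovery paths, which this sketch leaves out.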


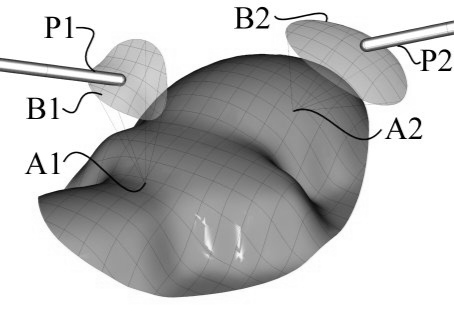

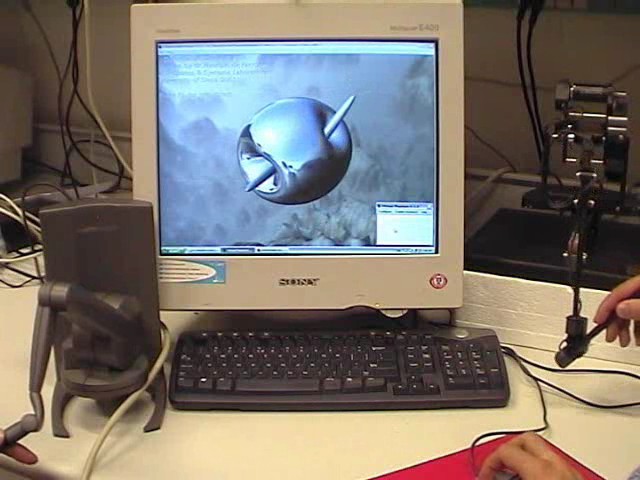

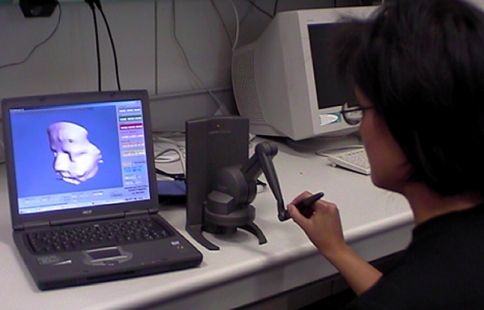

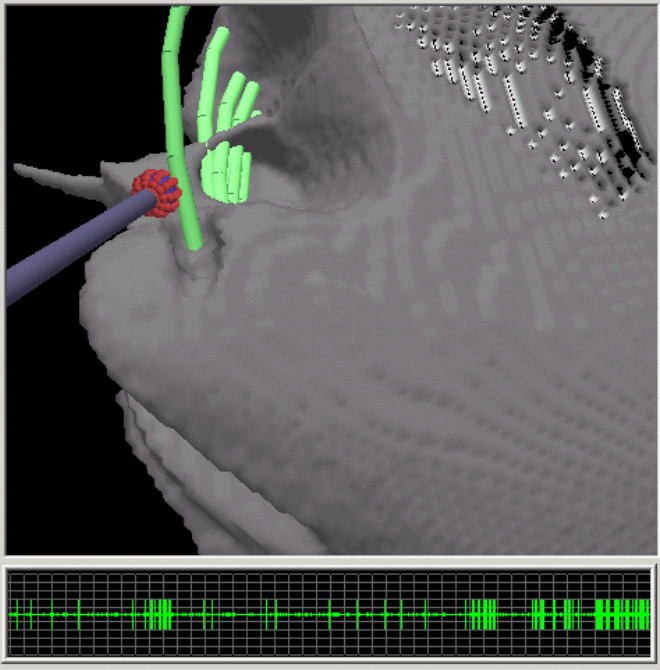

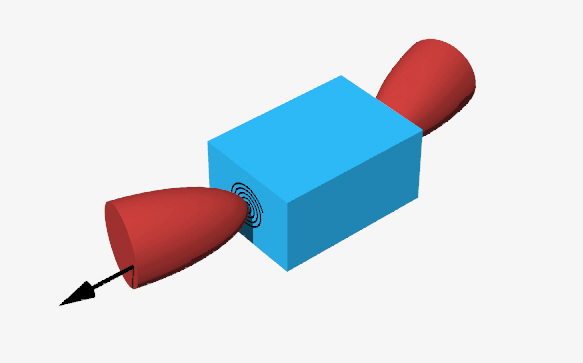

Simulation of Temporal Bone Surgery

We created a framework for training-oriented simulation of temporal bone surgery. Bone dissection is simulated visually and haptically, using a hybrid data representation that allows smooth surfaces to be maintained for graphic rendering while volumetric data is used for haptic feedback. Novel sources of feedback are incorporated into the simulation platform, including synthetic drill sounds based on experimental data and simulated monitoring of virtual nerve bundles. Realistic behavior is modeled for a variety of surgical drill burrs, rendering the environment suitable for training low-level drilling skills. The system allows two users to independently observe and manipulate a common model, and allows one user to experience the forces generated by the other’s contact with the bone surface. This permits an instructor to remotely observe a trainee and provide real-time feedback and demonstration.

Where: Stanford Robotics Lab, Stanford School of Medicine

Collaborators: Dan Morris, Christopher Sewell, Nikolas Blevins, Kenneth Salisbury
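To give a flavor of how volumetric data can drive haptic feedback during drilling, here is a rough sketch. It is not the simulator's actual algorithm: the spherical burr, the penetration-based force law, the erosion rate, and the function name `drill_step` are all assumptions made for illustration.

```python
import math


def drill_step(voxels, center, radius, stiffness=1.0, removal=0.25):
    """One haptic update for a spherical burr against a voxel volume.

    voxels: dict mapping (i, j, k) integer grid coordinates to density in [0, 1].
    Returns the force on the burr as an (fx, fy, fz) tuple and erodes the
    density of every voxel the burr overlaps.
    """
    fx = fy = fz = 0.0
    for pos, density in list(voxels.items()):
        dx, dy, dz = (center[0] - pos[0], center[1] - pos[1], center[2] - pos[2])
        dist = math.sqrt(dx * dx + dy * dy + dz * dz)
        if density <= 0.0 or dist >= radius or dist == 0.0:
            continue
        # Each overlapped voxel pushes the burr away, scaled by penetration depth.
        depth = radius - dist
        scale = stiffness * density * depth / dist
        fx += scale * dx
        fy += scale * dy
        fz += scale * dz
        # Erode the bone: reduce density, removing the voxel once it is empty.
        remaining = density - removal
        if remaining <= 0.0:
            del voxels[pos]
        else:
            voxels[pos] = remaining
    return fx, fy, fz


# A flat 5x5 slab of bone at z = 0, with the burr centre just above it.
bone = {(i, j, 0): 1.0 for i in range(5) for j in range(5)}
force = drill_step(bone, center=(2.0, 2.0, 0.5), radius=1.0)
```

In a hybrid representation like the one described above, the surface mesh used for graphics would then be updated wherever voxel densities changed. That step is omitted here.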


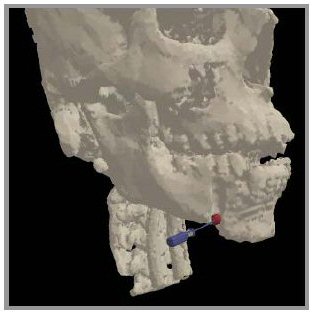

Craniofacial Surgery Simulation

Where: Stanford Robotics Lab, Stanford School of Medicine

Collaborators: Dan Morris, Sabine Girod, Ken Salisbury


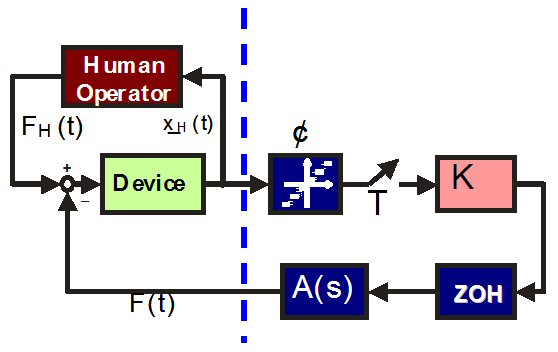

Haptic Interface Control

I'm currently working on various projects involving the stability analysis of haptic devices and the synthesis of better control algorithms that allow a wider range of impedances to be rendered.


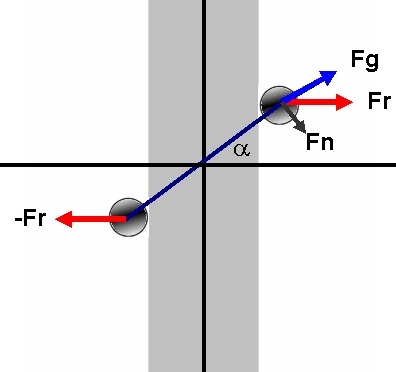

Passivity analysis of Haptic Devices

The stability of haptic devices has been studied in the past by various groups (Colgate, Hannaford, Gillespie, ...). Past analyses have not focused on quantization, Coulomb friction, and amplifier dynamics. Our work extends those results to include these effects.

Where: Stanford Robotics Lab, Stanford Telerobotics Lab, LAR-DEIS University of Bologna

Collaborators: Nicola Diolaiti, Gunter Niemeyer, Ken Salisbury, Claudio Melchiorri
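For context, the classical passivity result for a sampled-data virtual wall (due to Colgate and Schenkel) requires the device's physical damping b to exceed K·T/2 + |B|, where K and B are the virtual stiffness and damping and T is the sampling period. The sketch below simply evaluates that condition. It ignores quantization, Coulomb friction, and amplifier dynamics, and the function names and numeric values are illustrative assumptions.

```python
def max_passive_stiffness(b, T, B=0.0):
    """Largest virtual-wall stiffness K satisfying b > K*T/2 + |B|.

    b: physical damping of the device (N*s/m)
    T: sampling period of the control loop (s)
    B: virtual damping of the wall (N*s/m)
    Returns 0.0 when the virtual damping alone already exceeds b.
    """
    return max(0.0, 2.0 * (b - abs(B)) / T)


def is_passive(K, b, T, B=0.0):
    """Check the classical virtual-wall passivity condition."""
    return b > K * T / 2.0 + abs(B)


# Illustrative numbers only: 1 kHz loop, 2 N*s/m of device damping.
k_max = max_passive_stiffness(b=2.0, T=0.001)
```

The bound shows why faster sampling or more physical damping widens the range of stiffnesses that can be rendered passively.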


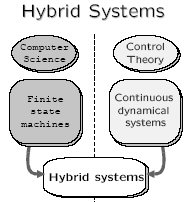

Haptic Devices as Hybrid Systems

We're currently analyzing the stability of haptic devices using Hybrid System concepts. Hybrid models describe systems composed of both continuous and discrete components, the former typically associated with dynamical laws (e.g., physical first principles), the latter with logic devices, such as switches, digital circuitry, and software code. As a part of this we're extending the concept of passivity to Hybrid Models.

Where: DII University of Siena, Stanford Robotics Lab

Collaborators: Filippo Brogi, Alberto Bemporad, Gianni Bianchini
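A toy example may help show what "continuous plus discrete" means for a haptic device. The sketch below simulates a mass hitting a virtual wall: the mass obeys continuous dynamics, while the controller samples the position at discrete ticks and switches between a free-space mode and a contact mode. The model, the parameter values, and the function name are assumptions chosen for illustration. It is not the hybrid formalism used in the project.

```python
def simulate_wall_bounce(K, b, m=0.1, T=0.001, v0=-0.1, substeps=20, t_max=1.0):
    """Mass hitting a sampled-data virtual wall that occupies x < 0.

    Continuous part: m * dv/dt = F_held - b * v, integrated with small Euler steps.
    Discrete part: every T seconds the controller samples x and switches the
    held force between free space (F = 0) and contact (F = -K * x).
    Returns the speed at which the mass leaves the wall, or None if it
    does not leave within t_max.
    """
    dt = T / substeps
    x, v = 0.0, v0
    held_force = 0.0
    touched = False
    for step in range(int(t_max / dt)):
        if step % substeps == 0:               # discrete event: controller tick
            held_force = -K * x if x < 0.0 else 0.0
            if x < 0.0:
                touched = True
            elif touched:
                return abs(v)                  # back in free space: report exit speed
        # continuous flow between controller ticks (semi-implicit Euler)
        v += dt * (held_force - b * v) / m
        x += dt * v
    return None


leaky = simulate_wall_bounce(K=1000.0, b=0.0)    # no device damping
damped = simulate_wall_bounce(K=1000.0, b=2.0)   # b > K*T/2
```

With no physical damping, the held force lags the true position and the mass tends to leave the wall faster than it arrived. This is the kind of energy generation that a passivity condition is meant to rule out.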


CHAI3D

Where: many places

Collaborators: Francois Conti, Dan Morris, Chris Sewell, Yuka Teraguchi, Doug Wilson, Maurice Halg, ...


Redundant and Mobile Haptic interfaces

Haptic devices normally feature fairly small workspaces.

Where: DII University of Siena

Collaborators: Alessandro Formaglio, Max Franzini, Antonello Giannitrapani, Domenico Prattichizzo


Multi-point haptic interaction

One of my main interests is in studying multi-point interaction with virtual objects. Past experience has shown that the simple single-point contact interaction metaphor can be surprisingly convincing and useful. This interaction paradigm does, however, limit what a user can do or feel. Single-point contact interaction makes it impossible for a user to perform such basic tasks as grasping, manipulation, and multi-point exploration of virtualized objects, thus restricting the overall level of interactivity necessary in various applications (such as surgical training). Pushing haptic interfaces beyond these limits has been, and still is, one of my main goals. Some of the aspects I have focused on are described below.


Stable Multi-point haptic interaction with deformable objects

Where: Stanford Robotics Lab, DII University of Siena

Collaborators: Ken Salisbury, Remis Balaniuk, Domenico Prattichizzo, Maurizio de Pascale, Gianluca de Pascale


Soft Finger Proxy Algorithm

A point contact, i.e. one that can exert forces in three degrees of freedom, combines a high level of realism with simple collision detection algorithms. Using two or more point contacts to grasp virtual objects works well but has one main drawback: objects tend to rotate about their contact normal. A simple way to avoid this, one that does not increase complexity, is to allow point contacts to exert torsional friction about the contact normal. The grasping community refers to this type of contact as a "soft finger". In our work we have proposed a soft finger proxy algorithm. To tune this algorithm to fit the behavior of human fingertips, we considered various fingerpad models proposed in the past by the biomechanics community, derived their torsional friction capabilities, and compared them to experimental data obtained from a set of five subjects.

Where: Stanford Robotics Lab

Collaborators: Ken Salisbury, Roman Devengenzo, Antonio Frisoli, Massimo Bergamasco
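The torsional part of a soft finger contact can be sketched as an angular proxy that sticks while the torque stays inside a friction limit and slips otherwise. The version below assumes the simplest linear model, in which the maximum torsional torque is proportional to the normal force. The function name, the stiffness, and the friction coefficient are illustrative assumptions, not the published algorithm or its fitted fingerpad parameters.

```python
def soft_finger_torque(theta_device, theta_proxy, normal_force,
                       k_torsion=0.5, gamma=0.01):
    """One update of an angular proxy about the contact normal.

    theta_device: current rotation of the finger about the normal (rad)
    theta_proxy:  rotation of the proxy from the previous update (rad)
    normal_force: contact normal force (N), non-negative
    k_torsion:    torsional stiffness coupling proxy and device (N*m/rad)
    gamma:        torsional friction coefficient (m), so |torque| <= gamma * normal_force
    Returns (torque_on_user, new_theta_proxy).
    """
    max_torque = gamma * max(normal_force, 0.0)
    torque = k_torsion * (theta_proxy - theta_device)
    if abs(torque) <= max_torque:
        # Static friction holds: the proxy stays put.
        return torque, theta_proxy
    # Friction limit exceeded: the proxy slips until the torque sits on the limit.
    torque = max_torque if torque > 0 else -max_torque
    return torque, theta_device + torque / k_torsion


stick = soft_finger_torque(0.01, 0.0, normal_force=1.0)   # small twist: proxy holds
slip = soft_finger_torque(0.10, 0.0, normal_force=1.0)    # large twist: proxy slips
```

Swapping in a different fingerpad model would change only how `max_torque` depends on the normal force. The stick/slip logic would stay the same.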


Psychophysics of multi-point contact

Past studies have shown that humans perceive an object's shape faster and more efficiently when using their hands to their full capability, i.e. when using all ten fingers at the same time. In this ongoing project we're trying to understand whether the same holds for shape perception mediated by haptic devices that allow multi-point interaction. Is multi-point kinesthetic feedback enough to improve users' perception of object shape? Can tactile feedback help?

Where: Stanford Robotics Lab, PERCRO


Sensor/actuation asymmetry for haptic interfaces

Haptic interfaces enable us to interact with virtual objects by sensing our actions and communicating them to a virtual environment. A haptic interface with force feedback capability will provide sensory information back to the user, thus communicating the consequences of his/her actions.

Where: Stanford Robotics Lab

Collaborators: Ken Salisbury, Gabriel Robles-De-La-Torre (psychophysical tests).


Haptic Media Types

Embedding haptic elements inside different media types may be one of the most promising, and yet conceptually simple, applications of force feedback. Letting users touch a product before they purchase it online, creating e-books where readers can interact with the story being narrated, allowing readers to test the results proposed in haptic-related scientific publications in electronic form - all these simple ideas would allow haptics to become a more common and useful everyday tool. We (Unnur for the most part) have developed an ActiveX control that allows haptic scenes to be embedded inside HTML pages and PPT presentations. The first version (contact me for the code... it's open source, even though a bit messy) only supported Phantom haptic devices. Currently we're working (Pierluigi for the most part) on a more general object that will be embeddable in PDF documents as well as HTML and PPT. We're also interested in testing how this technology can be used in online shopping and interactive electronic book scenarios.

Where: DII University of Siena, Stanford Robotics Lab

Collaborators: Unnur Gretarsdottir, Pierluigi Viti, Kenneth Salisbury


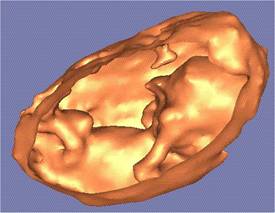

Haptic Interaction with fetuses

Where: DII University of Siena

Collaborators: Berardino La Torre (who has been the main programmer behind this), Domenico Prattichizzo, Antonio Vicino, Siena University Medical School.

Some of the algorithms developed for Fetouch are now being used in another project.

The primary goal of this project is to create a virtual-reality-based system for training physicians, nurses, and allied health care professionals in newborn resuscitation. We're currently developing a first prototype of the system, which will allow trainees to physically examine the virtual baby, perform critical technical interventions, and develop the cognitive and motor skills necessary for caring for real human patients. Interesting research aspects include: creating a realistic physical model of the baby from CT and MRI scans; creating a model of the baby that relates physiologic, anatomic, and behavioral characteristics; and creating haptic rendering algorithms for some basic interventions (chest compression, feeling a pulse by pinching the umbilical cord, ventilation, intubation).

Where: DII University of Siena, Stanford Center for Advanced Pediatric Education (CAPE)

Collaborators: Berardino La Torre, Louis Halamek, Allison Murphy, Domenico Prattichizzo


Pure Form

The "Museum of Pure Form" was conceived in 1993 by Professor Massimo Bergamasco, who was my Ph.D. advisor at PERCRO. However, it wasn't until the end of the 90s that the project was funded by the IST program of the EU. I was very lucky to be involved in the project from the beginning, and participated at various levels (grant writing, managing contacts with museums, haptic rendering algorithms). The system is now being showcased in various European museums, and a collection of 3D digital models of statues has been created using 3D scanners. Users can physically interact with statues, something that would normally be impossible (or, I guess, not advisable). For more information on the project please visit www.pureform.org.

Where: PERCRO (Pisa), Centro Galego de Arte Contemporánea, University College London (UCL)

Collaborators: Massimo Bergamasco, Antonio Frisoli, all the folks at PERCRO


Motion-base simulators: Moris

This was the first major project I was involved with at PERCRO during my PhD. Moris is a 7DOF motion-base motorcycle simulator based on a hydraulically actuated Stewart platform. While similar ideas have been implemented for flight simulators, Moris was, at the time (1999), the most advanced motorcycle simulator ever built. Moris was built with and for PIAGGIO (the makers of Vespa scooters) and is currently located at their headquarters in Pontedera, Italy.

Where: PERCRO (Pisa), Piaggio

Collaborators: Carlo Alberto Avizzano, Diego Ferrazzin, Giuseppe Prisco, Massimo Bergamasco.